“The Celebrated No-Hit Inning,” a 1956 science-fiction short story by Frederik Pohl, contains, as a prelude establishing his premise, a vivid sketch of machines taking over a job once thought open only to humans. An inventor showed up at a major-league ballpark with a robot batter. As skeptical players guffawed, the robot whiffed on the first batting-practice pitch it got, but the inventor said it just needed a minor adjustment and applied his screwdriver. Sure enough, the robot drove the next pitch sharply, and every pitch thereafter. In short order robots replaced every major leaguer, and soon the idea of a human ballplayer competing against a robot became laughable.

Robot athletes are still science fiction, but robots performing repetitive tasks, such as on assembly lines, have been around for decades. Many repetitive white-collar tasks have also been automated by computer software. My particular interest is creative pursuits, once thought immune to replacement, where artificial intelligence (AI) is coming on strong and companies are racing to explore and monetize it. Indeed, the tsunami of developments in this field is too much for me to capture in this blog post. But I can offer my opinion.

How did we get here?

If you think about it, we’ve been following this path for thousands of years. The history of civilization consists of the replacement of human labor, first by animals and then by machines. Oxen plowed fields, which replaced foraging; horses carried travelers; looms wove cloth; engines replaced horses. The inevitable result was more output from fewer people, freeing us to pursue other endeavors.

Broadly speaking, these advances have been steady and successful. For example, at one time three quarters of the farmland in the United States was used for growing food for horses, and manure in city streets was an urgent public health threat. We used horses as working animals right into World War II before we could replace their labor with engines. Since then the US horse population has declined by half, but I don’t think they would complain today about their lot as mostly recreational animals or pets, and the farmland is freed up for other crops. Similarly, mechanical devices have completely replaced many categories of manual labor. The overall impact has been great for consumers but not, at least in the short term, for the workers affected. The Industrial Revolution was recent enough that we have stories about the impact of job disruption on the displaced workers. But though we have many more people today, most of us can earn a living one way or another, because new jobs have been created that tend to be less dangerous and less repetitive.

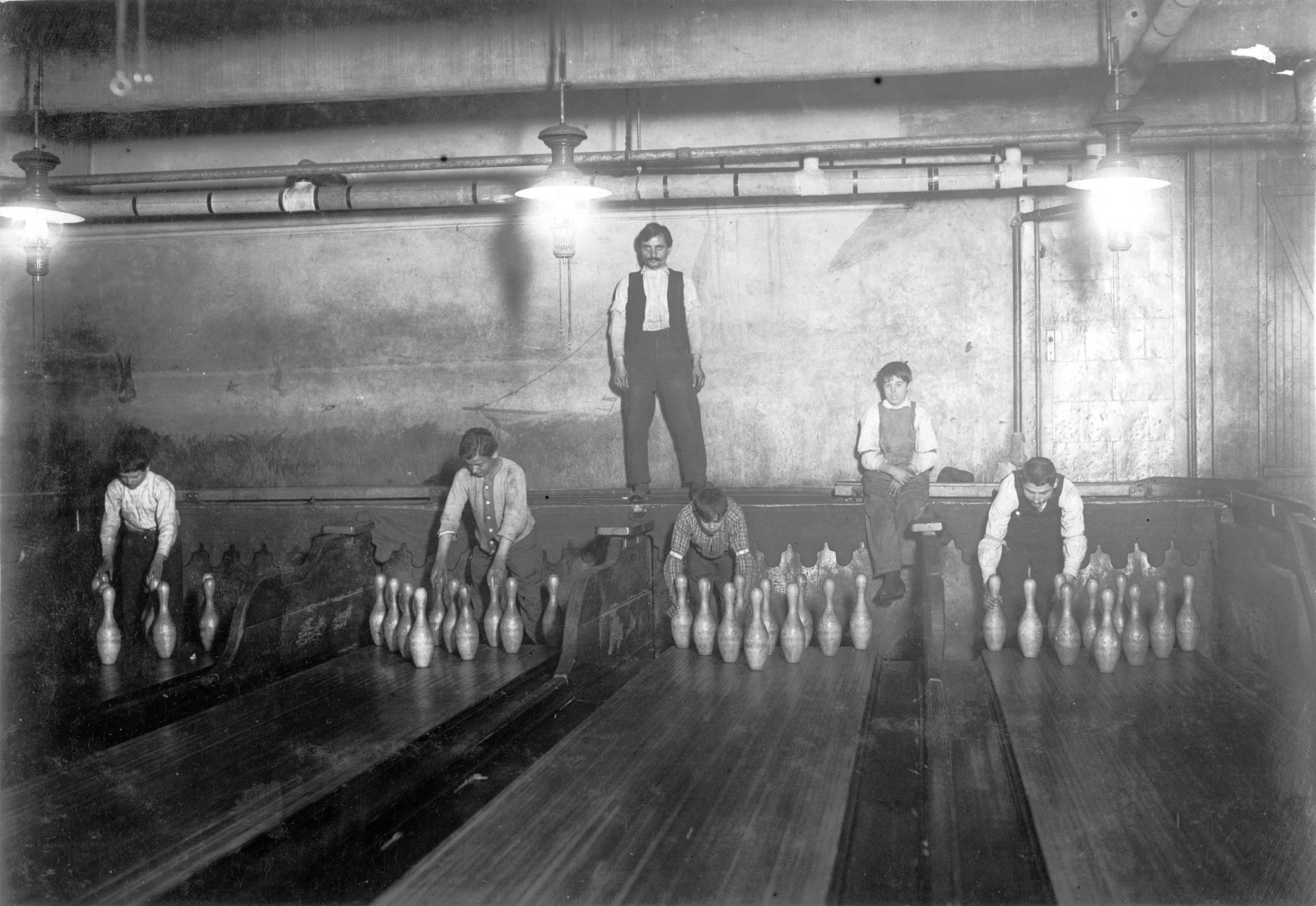

![One of the many job types supplanted by machines [Wikipedia] Four teenage boys setting pins by hand in a New York City bowling alley, 1910, under the supervision of a boss](https://upload.wikimedia.org/wikipedia/commons/a/a0/Pinboys_nclc.04636.jpg)


Admittedly, on a small scale things are not so rosy. As Harry Truman said, a recession is when your neighbor loses his job; a depression is when you lose yours. Replacement hasn’t happened yet in baseball (though automating ball-and-strike calls can’t come soon enough). But AI is improving at an incredible rate across a huge range of job titles. Hay farmers, pin setters, telephone operators, copyeditors, technical typists, technical illustrators—are we next? I want to focus on creative endeavors in general and writing—OK, technical writing—in particular.

A brief history of artificial intelligence is in order. The first generation of AI programs explored the limited world of game playing. It was trivially easy to code an unbeatable tic-tac-toe program, but more complex games proved challenging. A well-known example is chess. Because the set of all possible positions in a chess game is beyond storage even today, chess programs have to figure things out move by move. The first generation of programs was given the rules of the game, the relative value of pieces, and a library of known solid openings. Without “knowing” what constituted a promising line, the programs relied on clever algorithms to evaluate a position and select the best move, and then on brute hardware power (CPU processing speed and memory) to evaluate positions as fast as possible. Still, it took nearly 50 years using that approach before Deeper Blue, an IBM program running on a supercomputer, defeated reigning world champion Garry Kasparov in an over-the-board match using regular time controls, and even at that it needed two tries.
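To make the "evaluate a position and select the best move" idea concrete, here is a minimal sketch of exhaustive game-tree search (minimax) for tic-tac-toe, the game small enough to solve completely. The board encoding (a 9-character string) and the function names are my own assumptions for illustration, not anything taken from a historical program. Chess programs of that era could not search to the end of the game like this; they cut the search off at some depth and substituted a heuristic score.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board whose cells are indexed 0..8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that side has completed a line, else None."""
    for a, b, c in LINES:
        if board[a] != ' ' and board[a] == board[b] == board[c]:
            return board[a]
    return None


def other(player):
    return 'O' if player == 'X' else 'X'


@lru_cache(maxsize=None)
def minimax(board, player):
    """Score a position from X's point of view: +1 win, 0 draw, -1 loss.

    `board` is a 9-character string of 'X', 'O', and ' ';
    `player` is the side to move.
    """
    w = winner(board)
    if w is not None:
        return 1 if w == 'X' else -1
    if ' ' not in board:
        return 0  # board full, nobody won
    scores = [minimax(board[:i] + player + board[i + 1:], other(player))
              for i, cell in enumerate(board) if cell == ' ']
    # X picks the highest score, O the lowest.
    return max(scores) if player == 'X' else min(scores)


def best_move(board, player):
    """Return the index of a move with the best minimax score for `player`."""
    moves = [i for i, cell in enumerate(board) if cell == ' ']

    def score(i):
        return minimax(board[:i] + player + board[i + 1:], other(player))

    return max(moves, key=score) if player == 'X' else min(moves, key=score)
```

Calling `minimax(' ' * 9, 'X')` searches every reachable position from the empty board. The same brute-force idea is hopeless for chess without a depth limit, which is why hardware speed mattered so much.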

Eliza was an early rules-driven natural-language program developed in 1966 by Joseph Weizenbaum at the MIT Artificial Intelligence Laboratory. Eliza was the first chatbot, designed to simulate conversation. Weizenbaum modeled its interaction style on a psychoanalyst, and it worked unexpectedly and disturbingly well: users engaged in personal conversations with Eliza, and his secretary even asked to interact with it privately. In a foreshadowing of today’s events, users misconstrued what Eliza was doing.

I once worked for a company that provided building blocks for businesses to write rules-driven decision-making programs. If businesses could codify their decision-making processes, then they could automate those processes. Wouldn’t it be great if an insurance claims adjuster or a bank mortgage officer could have access to a program that always gave expert, by-the-book decisions, never skipping a step or indulging in favoritism? The issues that emerged with expert systems (not from my former employer but in general) were twofold. First, I say “access,” but of course businesses preferred to replace workers. Second, in practice expert systems coded with existing policies and trained on existing data were unwittingly given, and thereafter acted on, past errors and existing biases, either in their algorithms or in the input data. For example, one justice-system program (not based on our software!) designed to recommend pre-trial release decisions and evaluate the risk of recidivism was tougher on Blacks than whites given the same case facts. These kinds of problems left businesses exposed to lawsuits, the very thing they were trying to avoid.

The next generation of AI programs used huge amounts of data to make their decisions. This technology was exemplified by IBM Watson, which was fed millions of documents and given the raw computational power to search through them in real time. In 2011 Watson impressively defeated “Jeopardy!” champions Ken Jennings and Brad Rutter in regular game play.

The next advance in AI was to dispense with programmed rules in favor of a “neural network” that weighed connections in data and produced a “best” outcome. In 2017 AlphaZero, a purpose-built game program designed by DeepMind, was given the rules of chess and set to play against itself to learn what patterns of play led to victory. Crucially, DeepMind engineers gave AlphaZero no chess knowledge beyond the rules; it developed superhuman chess skill without benefit of a single hand-coded evaluation heuristic or even an opening library. After 44 million(!) self-practice games, the AI crushed Stockfish, the best chess program available, in a 100-game test match without losing a game. (In a thousand-game rematch played in 2018 with conditions more favorable to its opponent, AlphaZero routed the then-newest version of Stockfish with 155 wins, 839 draws, and only 6 losses.) The game logs showed that AlphaZero repeatedly evaluated positions and lines as winning that its opponent evaluated as winning for itself. Not even human chess masters understood AlphaZero’s “thinking.”

The newest generation of AI, which has captured the imagination of computer experts and laypersons alike, combines neural networks with training on huge amounts of domain information. What can it do?

- In a large study, an AI trained on mammograms more accurately predicted breast cancer risk than human radiologists.

- College admissions officers already expect that AI-generated admissions essays will figure prominently this year, and are planning to use AI tools to detect AI essays.

- Companies are already deploying AI tools to screen job applications.

- A researcher prototyping AI drug discovery thinks he can reduce the cycle time from two years (and billions of dollars) to two weeks.

It seems no creative job is beyond AI’s reach. In art, photography, interior design, and other creative endeavors, AI’s advances have been stunningly fast, and in some cases have already reached the threshold of professional viability. AI services such as Midjourney, Stable Diffusion, and DALL-E can generate whole images in response to simple text queries. (Imagine using alt-text to generate an entire image.) One man used an AI to generate an image that won first prize in a digital fine-arts competition at the Colorado State Fair, to the outrage of other contestants. In an unsettling recent interview, a game designer recounted balking at a graphic designer’s fee of $50 per image and turning to an AI to get what he wanted in a few minutes and for a few cents. How can anyone compete with that?

In November 2022, the company OpenAI (founded in 2015 and the creator of DALL-E) released ChatGPT, a chatbot built on its Generative Pre-trained Transformer (GPT), a large language model trained using ten trillion data points. ChatGPT generates responses by predicting, based on its input, the next likely word and building forward. Just six months later, ChatGPT is already responding to 100 million queries a week. Companies are using the latest version of the model, GPT-4, to create AI-powered chatbots in a dizzying array of fields. Microsoft, Google, and others are racing to incorporate AI into their core products. (Imagine a Microsoft Word that can not just format your report but write it for you.)

Because of its broad training, ChatGPT displays expertise in a huge range of fields. This infographic summarizes an OpenAI report on GPT-4 and its impressive performance on selected professional examinations.

Copywriters, social-media content creators, and now editors are suffering the effects of AI competition. Scribendi AI is marketed as a productivity tool for overworked copyeditors. AuthorONE is marketed to publishers and offers manuscript assessment. Paperpal is aimed at academic writers, whose work can be formulaic. Sudowrite and Marlowe are aimed at fiction writers; the latter will optionally upload and anonymously store your manuscript in a “research database.” In these and other fields, AI offers—threatens?—to remove the middle folks entirely and generate output on its own, not in days but in minutes.

Issues in artificial intelligence

The capabilities of generative AI confound researchers, who can’t say where the responses are “coming from,” and who wonder if AIs have developed “theory of mind”—that is, are conscious. If this sounds unsettling to you, you’re not alone. On May 29, 2023, the nonprofit Center for AI Safety issued a sensational one-sentence open letter: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”


It was signed by over 350 AI researchers and tech executives, including OpenAI CEO Sam Altman himself. Microsoft (of all companies!) has called for government regulation of the field. Alrighty then… What are the risks? Off the top of my head I can think of the Skynet scenario and the risk that jobs will be replaced or eliminated faster than workers, and the economy, can adapt to the change. Altman also cited the risk of spreading misinformation. Mark Twain said a lie can travel halfway around the world while the truth is putting on its shoes—and he never saw the Internet.

Like Johnny Five from “Short Circuit,” an AI in training requires a lot of input, but it can’t evaluate the quality of what it’s fed. Garbage in, garbage out. Back in 2016 Microsoft trained an adaptive chatbot using general Twitter input and some comedic riffs and set it loose on Twitter to interact with users. The hope was that the program would learn to sound like just another user, but had they ever used Twitter? The results were disastrous: within hours, an organized troll attack corrupted it into spewing racist and sexist chatter, and in less than a day Microsoft pulled the plug. In theory, a small-scale AI could go through your company’s internal information and then answer queries about it, solving the common problem of navigating poorly organized Confluence pages or SharePoint sites. But as a practical matter I know that regardless of how it’s stored, half the stuff in every company’s internal library is already obsolete. Quickly finding a wrong answer is not an improvement.

Predictive AI is non-deterministic. It produces output one likely word after another, but not necessarily the most likely next word. If you ask the same question twice in a row you can get slightly different responses. That’s a feature, meant to keep AI from sounding robotic. But the model is predicting text, not checking facts, so even OpenAI admits that ChatGPT, while it can create new content, can also “hallucinate” false statements of fact. This is a fatal flaw in every field of endeavor where correct answers are required. Has Roger Federer won five Wimbledon singles titles or eight? Feed an AI a mix of current and outdated information and it might say both. For AI, hallucinations are a feature and a bug. So far, the quality triangle holds: AI produces content that’s fast, cheap, but too often bad.
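The difference between "one likely word" and "the most likely word" can be sketched in a few lines. This is a toy illustration only: the three candidate words and their scores are numbers I made up, and the `temperature` knob is the conventional name for the setting that controls how adventurous the sampling is. Real language models do the same thing over tens of thousands of candidate tokens.

```python
import math
import random

# Hypothetical scores for the next word after a prompt such as
# "Federer's number of Wimbledon singles titles is". Invented numbers.
SCORES = {"eight": 2.0, "five": 1.2, "seven": 0.3}


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = {word: s / temperature for word, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - top) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def greedy(scores):
    """Deterministic choice: always the single highest-scoring word."""
    return max(scores, key=scores.get)


def sample(scores, temperature, rng):
    """Non-deterministic choice: draw a word in proportion to its probability."""
    probs = softmax(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


rng = random.Random(0)  # seeded only so the demo is repeatable
draws = [sample(SCORES, 1.0, rng) for _ in range(1000)]
counts = {word: draws.count(word) for word in SCORES}
```

With these invented scores, `greedy` always answers "eight", while `sample` returns the less likely words a meaningful fraction of the time. Nothing in either function looks anything up, which is the point: a plausible wrong answer and a right one come out of the same machinery.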

Even high-quality input, taken from websites instead of chats, or amassed from billions of images scraped from the web, can be problematic. Today AI can create an image simply from a prompt describing the desired result, but many current examples don’t pass careful inspection. The prizewinning image above looks fantastic, but the closer you look the weirder it gets. Today AI can produce photorealistic output but has a hard time with hands. The field is advancing so fast that it might just take a minor adjustment, but it’s not perfect yet.

Where did all that information come from? Is it curated, or just indiscriminately collected? Examples have surfaced of AI-generated art that contains stock-photo watermarks. Josh Marshall of Talking Points Memo points out that much of the visual information that’s been hoovered up is copyrighted, thus resulting in generative art demonstrably built through intellectual theft. I stand in solidarity with my graphic-arts colleagues to denounce this dishonest practice!

Similarly, I am personally nervous about startups that offer “help” with manuscripts. This may sound like a valuable service for a great price. But are you submitting your great American novel for editing or harvest? Are you even writing it?

If you ask a generative AI for a sonnet in the style of Shakespeare it can easily provide one because it has the data on what words the Bard used and how he strung them together. If you don’t specify a style subset, the results are still coherent but rely instead on the expressive skill of the crowd, which in practice tends toward the trite. Much worse, because statements of fact are predicted, not looked up, they ain’t necessarily so. Like Weizenbaum’s secretary, early adopters of AI are misinterpreting what they’re getting.

In a recent legal case, an attorney in a civil action used ChatGPT to prepare a brief, but the AI actually generated nonexistent case citations and the other side noticed. Another case arose when a journalist trying out ChatGPT asked for a summary of a gun-rights case, and the answer stated that a certain Georgia radio host was accused of embezzling from one of the parties. The man actually had no involvement and filed a libel suit against OpenAI. The first lawsuit from a patient whose cancer goes undetected by an AI is inevitable, even if the AI is objectively better than a human. Conversely, what happens if a human double-checks and mistakenly overrides a correct AI diagnosis?

In the software field we know about “vaporware.” Elon Musk introduced vaporware to the automotive industry by announcing that the Tesla Autopilot was “fully operational” and releasing Full Self-Driving as beta, which many owners immediately began to use. Subsequent developments showed the technology was far from ready for public use. Autonomous control (definitely not my area of expertise) consists of the vehicle sensing its surroundings, interpreting sensor data, and using those interpretations to make driving decisions. Cruise control, a simple application, has been available for decades; adaptive cruise control, where the vehicle slows or stops depending on what’s happening ahead, has been available for about ten years. But a fully self-driving car requires highly complex software with hundreds of subroutines making hundreds of driving decisions at unpredictable moments. As yet, it hasn’t been safe enough.

Colleges are already looking to set AIs to catch AI-generated admissions essays. If existing data is tainted with human error, can’t we just create an AI to generate data for use by another AI? Researchers warn that an AI feedback loop could lead to “model collapse,” in which models trained on AI-generated output degrade with each generation.

Getting past AI’s formative years won’t end the problems. The invasion of Ukraine is the first full-scale drone war, and just as with airplanes in WWI, both sides have quickly escalated their role from reconnaissance to weapons delivery. So far, the drones on both sides are remotely piloted. But it’s possible to add AI to create autonomous devices that can identify enemy combatants on the battlefield and make kill/no kill decisions. (God help the civilian who emerges from his basement during a skirmish.) Naturally, people are hesitant to delegate life-and-death decisions to computers. As of this writing, the Pentagon hasn’t actually deployed such devices and instead relies on remote operators. But killer robots are entirely possible and for now are restricted only by doctrine.

How can we differentiate our work?

We’ve fought the battle of quality versus quantity for years, so this is familiar ground. I would suggest that it’s foolish to replace one good worker with ten bad ones for the same price, but in the current environment companies increasingly disagree. What, then, makes our work worth paying for?

So far, at least, ChatGPT struggles with English language and literature. We can’t expect our natural advantage to last forever, but we have it for now. Looking more deeply, large language models draw from many examples of common, trite phrases, which is reflected in their output. (Strunk and White is not overweighted in samples.) More deeply still, AI only simulates intelligence. Its output isn’t necessarily logical or even correct. AI output will need as much review as the work of a human writer, and probably more.

I worked at a startup whose CEO was reluctant to publish useful technical details because “our competitor will just steal it.” I had a problem with not helping our users and I said so. The STC Ethical Principles specifically call on us to create “truthful and accurate communications” under the core principle of honesty. Until now, members have interpreted this to mean “write facts, not marketing hype.” But today it differentiates us from AIs, because no self-respecting tech writer makes up facts. The ethical standards also call on practitioners to work to help users, implicitly guiding us not to work to hurt them. Given the current state of AI, that’s also a differentiator, because we know the difference between helping and hurting.

There’s a lot of input on the public web by both professional and amateur technical writers. I’ve already seen an attempt to use it to generate technical documentation. It’s easy to generate information in the style of Microsoft because the company has published a widely adopted style guide. But technical facts are company specific. If your company wants technical documentation in the style of your company and accurate for the next release of your product, it will first have to input its existing documentation, which may or may not be public, and then the specs for the next release, which are proprietary, copyrighted material. Otherwise, the new documentation will read like everything else on the web in both style and function. Is your company willing to feed proprietary material to a third party, or develop its own dedicated small language model AI, just to avoid paying a technical writer? That doesn’t seem cost effective or wise. To avoid model collapse, it doesn’t seem like humans can be removed from the content-creation equation any time soon. I like the idea that my work might become the gold standard somewhere!


But let’s look past the period of minor adjustment. Let’s assume AI technical output becomes as good as human-written output—cheaper, faster, and just as good. What then?

As a technical communicator, there were topics I could write in my sleep, and possibly sometimes did. For example, when a management tool followed the CRUD model (create, read, update, and delete), I could document a new manageable object by writing an overview of the object and then topics on how to create, display (or read), update (or edit), and delete it. If the architectural model was well designed and followed, every task proceeded in parallel for each object, so I could copy and paste the text from an existing topic… Admit it, you’re asleep already. Once the development team settles on a model (or pattern), a new error message, set of permissions, API method, or supported disk drive can be coded, tested, and documented by rote by junior workers. Work that follows a strong pattern and can be done by rote is very likely to be given to an AI. Hint: that includes the code. But as far as writing goes, these topics, which made up the bulk of my later assignments, were never the topics I looked forward to writing anyway. The complaint I’ve had, and heard, for years is that the rising volume and quickening pace of our work is overwhelming. If we don’t have to do the boring stuff, is that actually bad?
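To show just how rote this pattern is, here is a small sketch that stamps out the topic list for any CRUD-managed object. The function name, the verbs, and the title wording are all hypothetical choices of mine, not taken from any real documentation toolchain, and it ignores niceties such as "a" versus "an."

```python
# Hypothetical CRUD verbs as they might appear in topic titles.
# "display" stands in for "read" and "update" for "edit".
CRUD_VERBS = ["create", "display", "update", "delete"]


def topic_outline(obj):
    """Return the topic titles for one manageable object.

    `obj` is a singular, lowercase noun such as "volume". Article handling
    ("a" vs. "an") is deliberately left out of this sketch.
    """
    titles = [f"{obj.capitalize()} overview"]
    titles += [f"How to {verb} a {obj}" for verb in CRUD_VERBS]
    return titles


outline = topic_outline("volume")
for title in outline:
    print(title)
```

If five lines of Python can produce the outline, it is not hard to believe a language model can fill in the body text. The overview topic, which explains what the object is for and why you would want one, is the part that still needs someone who understands the product.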

I question whether AI is a current threat to our profession, but we have to think of the future. Another approach to the challenge of AI is to level up, not surrender. As one marketing slogan put it: “AI won’t replace you. A person using AI will.” If you can consider AI as just the latest in a line of productivity tools going back to the typewriter, you can take advantage and get through the disruption. (I wonder what to say to new practitioners, as the skills required to enter the profession are increasing still further; but they don’t have to unlearn old skills.)

It’s going to be a bumpy transition. But I think we will get through to the other side. Perhaps there are higher pursuits to which we can turn our attention.